Do you want to scrape some data from a website, but you are not ready to deploy your web scraper to the cloud and pay for page requests? Then perhaps a better option for you is to download the scraper, run it on your computer or server, and get the data in the format you need.

This is easy to do with the new compile options in Diggernaut's service. You can now compile a digger into an executable program for Windows, Linux, and MacOS. This lets you run your diggers outside of our cloud, on your own computer or server. Running a self-hosted digger does not consume your Diggernaut account resources, so you save them for other tasks.

In addition, compiled diggers take up little disk space (about 20 MB) and consume few system resources (roughly 10-30 MB of RAM and 1-3% of the CPU).

The compilation service is free during the beta phase. After the release, our paid subscribers will keep using it for free, while free users will be able to buy compilation credits.

How does it work? It is straightforward. You create a digger and write a configuration for it (or use a ready-made one you already have). As you probably know, there are three ways to get a configuration: use our Excavator app, write one in our meta-language, or hire one of our developers or a third-party developer to create it for you. Once you have a digger configuration, save it to the digger. Then run the digger in debug mode to make sure it works correctly. Resources are not charged while a digger is in debug mode, so you do not need to worry about using up your account resources. If the digger works correctly and the collected data looks good, you can proceed with compilation.

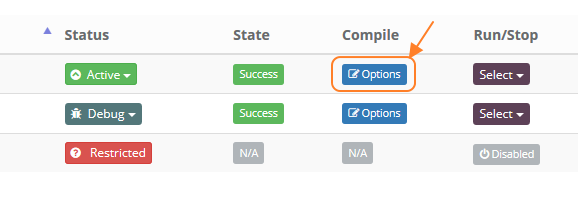

To do so, go to the digger list, find your digger, and click the “Options” button in the “Compile” column.

A new panel opens.

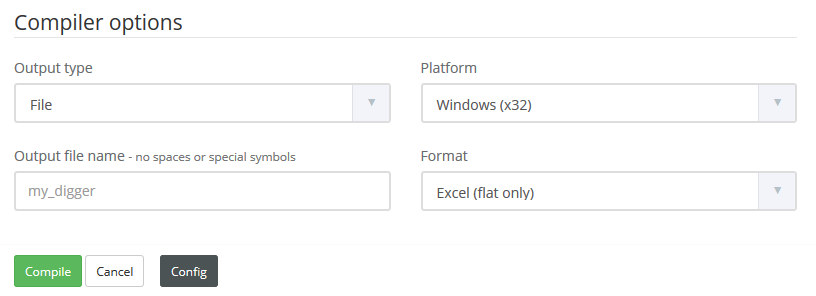

In the left pane, you will see the compilation settings.

First, choose where the digger sends its output: to a file or to the console. To write data to a file, select “File” in the “Output Type” field; to print it to the console, select “StdOut”.

If you choose file output, specify the file name in the “Output File Name” field.
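With “StdOut” output, another program can launch the digger and read its results directly. Below is a minimal Python sketch of that idea, assuming the digger was compiled with Output Type set to StdOut and Format set to JSON, and that it prints a single JSON document to the console. The function name `run_digger` is hypothetical, and this post does not document any command-line arguments for the compiled executable, so the sketch passes none.

```python
import json
import subprocess
import sys
from typing import Any, List, Optional


def run_digger(command: List[str], timeout: Optional[float] = None) -> Any:
    """Run a compiled digger and parse the JSON it prints to the console.

    Assumption: the digger was compiled with Output Type = StdOut and
    Format = JSON, and it writes one JSON document to standard output.
    """
    result = subprocess.run(command, capture_output=True, text=True, timeout=timeout)
    if result.returncode != 0:
        # Pass the digger's own error output through before failing.
        sys.stderr.write(result.stderr)
        raise RuntimeError(f"digger exited with code {result.returncode}")
    return json.loads(result.stdout)
```

For example, `run_digger(["./digger"])` on Linux or MacOS, or `run_digger(["digger.exe"])` on Windows; these file names are placeholders for whatever executable you downloaded.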

In the “Format” field, select the format you need. Four formats are currently available: Excel, CSV, JSON, and XML. Excel and CSV do not support nested data structures, so before using them, make sure your data is flat (the root objects have only fields, no nested objects). If you need another format, please contact us and we will try to add it.
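One way to check flatness before picking Excel or CSV is to inspect a sample of records collected in debug mode. The Python sketch below assumes each scraped record can be loaded as a JSON object (a Python `dict`); the helper names `is_flat` and `flatten` are hypothetical and not part of Diggernaut. `flatten` shows one common workaround (joining nested keys with dots), which is a general technique, not a Diggernaut feature; restructuring the digger configuration so it emits flat records is usually the cleaner fix.

```python
from typing import Any, Dict


def is_flat(record: Dict[str, Any]) -> bool:
    """Return True if no field value is itself an object (dict) or array (list)."""
    return not any(isinstance(value, (dict, list)) for value in record.values())


def flatten(record: Dict[str, Any], prefix: str = "", sep: str = ".") -> Dict[str, Any]:
    """Collapse nested dicts and lists into one level with dotted keys.

    List items are keyed by their index, e.g.
    {"tags": ["a", "b"]} becomes {"tags.0": "a", "tags.1": "b"}.
    Empty nested dicts or lists produce no keys.
    """
    flat: Dict[str, Any] = {}
    for key, value in record.items():
        name = f"{prefix}{sep}{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        elif isinstance(value, list):
            # Treat a list like a dict keyed by position, then recurse.
            flat.update(flatten({str(i): item for i, item in enumerate(value)}, name, sep))
        else:
            flat[name] = value
    return flat
```

For example, `flatten({"seller": {"name": "B"}})` returns `{"seller.name": "B"}`, which `is_flat` accepts.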

Finally, in the last field, “Platform”, choose the platform where you want to run your digger. We currently support Windows, MacOS, and Linux on x86 (32-bit) and x64 (64-bit). If you need another platform, please contact us; if the compiler supports it, we will add it as soon as possible.

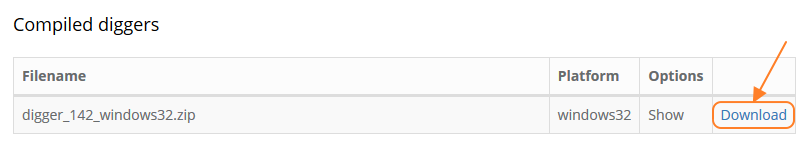

After you configure the compiler, click the “Compile” button. Within a few dozen seconds, the compiled digger will appear in the table on the right.

You can download it by clicking the “Download” link and run it on your computer. The download link is valid for 7 days, after which the compiled digger is removed.

What happens if a compiled scraper stops working correctly, for example because the website changed its structure and the data is now scraped incorrectly? You can always go back to your Diggernaut account, run the digger in debug mode, find what is wrong, fix it, and compile a revised version. Alternatively, ask one of our developers or a third-party developer to help you.

Happy scraping!
